Insufficiently Anonymized

by wjw on June 14, 2017

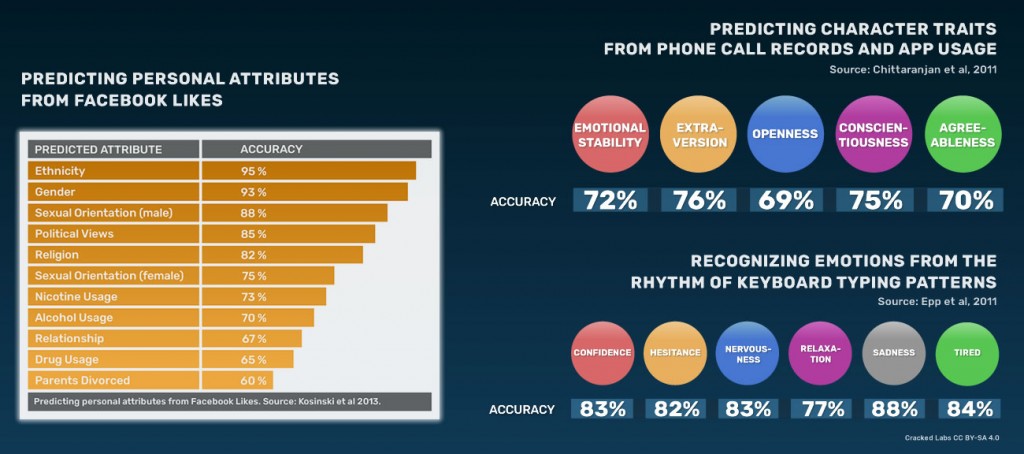

Facebook can recognize your emotions from your keyboard typing patterns. They can work out your religion, your sexual orientation, and whether you use controlled substances with what they claim is a fair degree of accuracy.

And they can make mistakes. A few months ago, I viewed on Amazon a science fiction novel by a gay author, and suddenly Amazon was offering me gay erotica, gay-themed films, and gay-themed calendars. (I had thought that Amazon maintained a Great Wall between erotica and other forms of literature, but apparently not in the case of LGBT fiction.)

So far, so amusing, but there are gay people who are in the closet for damned good reasons, for instance to avoid being murdered by the religious crazies who infest their small, close-minded community, or to avoid imprisonment on behalf of the bare-chested judoka who runs the country. If all those people have to do is look at a person’s Amazon page, or buy some insufficiently-anonymized data from Amazon or Facebook or Oracle, then the closet begins to look a lot more like a prison cell.

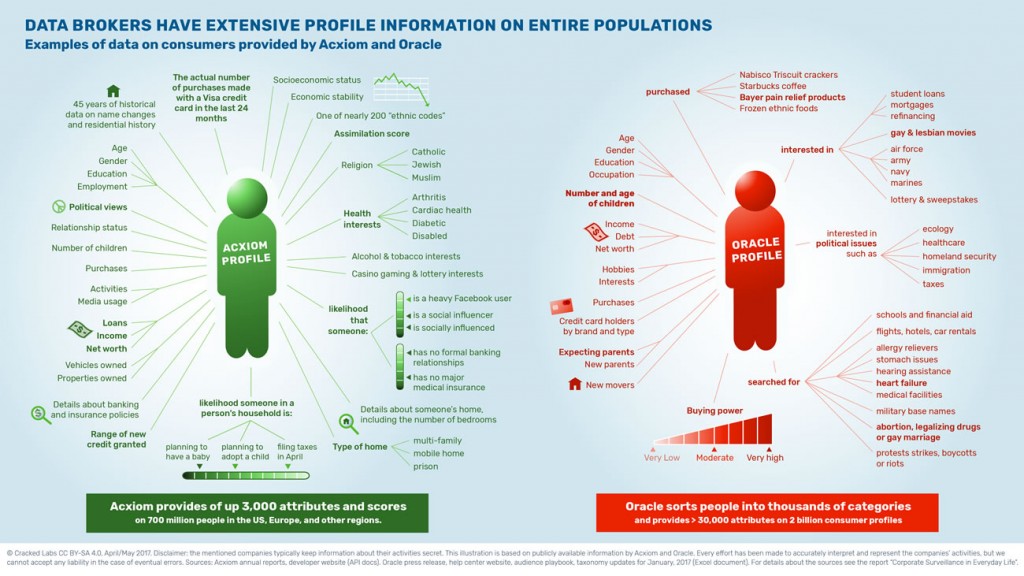

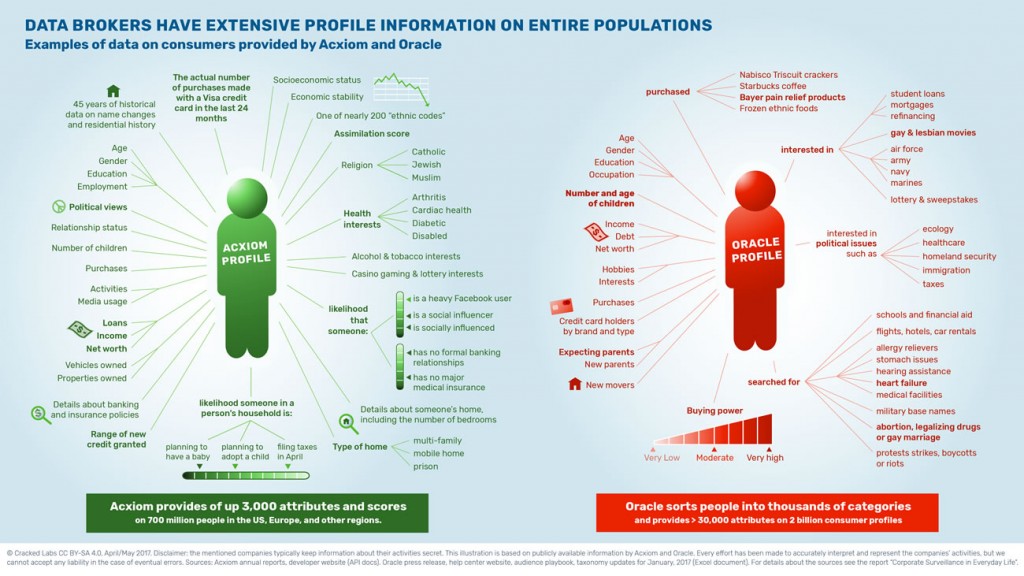

…such methods are already used to sort, categorize, label, assess, rate, and rank people not only for marketing purposes, but also for making decisions in highly consequential areas such as finance, insurance, and healthcare, among others.

They can calculate your creditworthiness without access to your actual financial transactions (which means any credit score generated in such a way is just an educated guess, not necessarily related to reality at all). If an algorithm decides that your lifestyle is cardiac-unhealthy, or that you’re likely to get cancer, your insurance will go up. (Hint: everyone gets cancer, assuming you live long enough, and particularly if you’re male and own a prostate.)

For example, the large insurer Aviva, in cooperation with the consulting firm Deloitte, has predicted individual health risks, such as for diabetes, cancer, high blood pressure and depression, for 60,000 insurance applicants based on consumer data traditionally used for marketing that it had purchased from a data broker.

The consulting firm McKinsey has helped predict the hospital costs of patients based on consumer data for a “large US payor” in healthcare. Using information about demographics, family structure, purchases, car ownership, and other data, McKinsey stated that such “insights can help identify key patient subgroups before high-cost episodes occur”.

The health analytics company GNS Healthcare also calculates individual health risks for patients from a wide range of data such as genomics, medical records, lab data, mobile health devices, and consumer behavior. The company partners with insurers such as Aetna, provides a score that identifies “people likely to participate in interventions”, and offers to predict the progression of illnesses and intervention outcomes. According to an industry report, the company “ranks patients by how much return on investment” the insurer can expect if it targets them with particular interventions.

But how do they know about your health? Your data’s supposed to be anonymized, right?

Data companies often remove names from their extensive profiles and use hashing to convert email addresses and phone numbers into alphanumeric codes such as “e907c95ef289”. This allows them to claim on their websites and in their privacy policies that they only collect, share, and use “anonymized” or “de-identified” consumer data.

However, because most companies use the same deterministic processes to calculate these unique codes, they should be understood as pseudonyms that are, in fact, much more suitable for identifying consumers across the digital world than real names.
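To see why a hashed email address works as a pseudonym rather than as anonymity, here is a minimal sketch. Everything in it is an assumption for illustration: the choice of SHA-256, the truncation to 12 characters, the `pseudonymize` helper, and the two made-up record sets. The quoted passage does not say which hashing process any particular company uses.

```python
import hashlib


def pseudonymize(email: str) -> str:
    """Turn an email address into a short hex code.

    Assumption: SHA-256 over the trimmed, lower-cased address, cut to
    12 hex characters. Real data companies may use other schemes.
    """
    normalized = email.strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()[:12]


# Two hypothetical companies, each holding "de-identified" records
# keyed by the hashed email address instead of a name.
broker_records = {
    pseudonymize("Jane.Doe@example.com"): {"purchases": ["blood pressure monitor"]},
}
insurer_records = {
    pseudonymize("jane.doe@example.com "): {"applicant_id": 1234},
}

# The same deterministic process yields the same code for the same person,
# so the two data sets can be joined without anyone ever seeing a name.
shared_codes = broker_records.keys() & insurer_records.keys()
linked = {
    code: {**broker_records[code], **insurer_records[code]}
    for code in shared_codes
}
```

Under these assumptions `linked` ends up with a single merged profile: the code is stable wherever the same address is hashed the same way, which is exactly what makes it useful for following one person from database to database.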

And they know your politics, or think they do. (I once commented on the site of a conservative who posted on Facebook, and was then inundated by offers from every wackadoodle right-wing nutjob with an internet connection. No, I do not want to join your crusade against the Muslim Lizard People, thank you.)

(And of course the Muslim Lizard Crusade might not have been a real thing, but a phony movement designed to discredit conservatives. Though it more often works the other way: many of the really intolerant, obnoxious Berniebros in the last election were conservatives trying to discredit the candidate they thought posed the greatest threat.)

The original purpose of this sort of data collection was to make it easier to sell you stuff that you might want, but now it’s beyond all that. We used to worry about governments doing this sort of thing, but it turns out governments are pretty bad at it, and in any case the government is only interested in the people they’re interested in.

In 1999, Lawrence Lessig famously predicted that left to itself, cyberspace will become a perfect tool of control shaped primarily by the “invisible hand” of the market. He suggested that we could “build, or architect, or code cyberspace to protect values that we believe are fundamental, or we can build, or architect, or code cyberspace to allow those values to disappear”. Today, the latter has nearly been made reality by the billions of dollars in venture capital poured into funding business models based on the unscrupulous mass exploitation of data. The shortfall of privacy regulation in the US and the absence of its enforcement in Europe has actively impeded the emergence of other kinds of digital innovation, that is, of practices, technologies, and business models that preserve freedom, democracy, social justice, and human dignity.

So far internet commerce is all about building monopolies. Anything about democracy and human dignity in that?

Via Chairman Bruce, a brief survey of what the corporate oligarchy knows about me, you, and everyone else.
